Ensemble Models: Boosting Techniques

Overview

- Boosting is a sequential process, where each subsequent model attempts to correct the errors of the previous model. The succeeding models are dependent on the previous model.

- In this technique, learners are learned sequentially with early learners fitting simple models to the data and then analyzing data for errors. In other words, we fit consecutive trees (random sample) and at every step, the goal is to solve for net error from the prior tree.

- When an input is misclassified by a hypothesis, its weight is increased so that next hypothesis is more likely to classify it correctly. By combining the whole set at the end converts weak learners into better performing model.

- Let’s understand the way boosting works in the below steps.

- A subset is created from the original dataset.

- Initially, all data points are given equal weights.

- A base model is created on this subset.

- This model is used to make predictions on the whole dataset.

- Errors are calculated using the actual values and predicted values.

- The observations which are incorrectly predicted, are given higher weights. (Here, the three misclassified blue-plus points will be given higher weights)

- Another model is created and predictions are made on the dataset. (This model tries to correct the errors from the previous model)

- Thus, the boosting algorithm combines a number of weak learners to form a strong learner.

- The individual models would not perform well on the entire dataset, but they work well for some part of the dataset.

- Thus, each model actually boosts the performance of the ensemble.

We will discuss 3 major boosting models: AdaBoost, Gradient Boost and XGBoost.

AdaBoost

1. Definition

- AdaBoost is an iterative ensemble method. AdaBoost classifier builds a strong classifier by combining multiple poorly performing classifiers so that you will get high accuracy strong classifier.

- The basic concept behind Adaboost is to set the weights of classifiers and training the data sample in each iteration such that it ensures the accurate predictions of unusual observations.

- Any machine learning algorithm can be used as base classifier if it accepts weights on the training set.

Stump: a tree with only 1 node and 2 leaves; Generally stumps does not perform as good as forest does; The AdaBoost uses the forest of stumps- AdaBoost should meet two conditions:

- The classifier should be trained interactively on various weighed training examples.

- In each iteration, it tries to provide an excellent fit for these examples by minimizing training error.

- Complete Procedure

- Assign each sample with a weight (initially set to equal weight)

each row in Dataframe has a equal weight - Use the feature selection in decision node method to choose the first stump;

- Measure how well a stump classifies the samples using:

where is the weight of and is the set of misclassified datapoints - Determine the vote significance for the stump using

- Laplace smoothing for the vote significance:

in case Total Error = 1 or 0, the formula will return error, we add a small value in the formula - Modify the weight of samples so that next stump will take the errors that current stump made into account:

6.1 Run each sample down the stump

6.2 Compute new weight using:- Formula:

= New Sample Weight = Current Sample weight. = Amount of Say, alpha value, this is the coefficient that gets updated in each iteration and = place holder for 1 if stump correctly classified, -1 if misclassified.

6.3 Normalize the new weights

- Formula:

- With the new sample weight we can either:

- Use

Weighted Gini Indexto construct the next stump (Best feature for split) - Use a new set of sample derived from the previous sample:

- pick until number of samples reach the size of original set

- construct an interval-selection scheme using the sum of new sample weight as cutoff value

if a number falls in i-th interval between (0,1), choose i-th sample;

- e.g (0-0.07:1; 0.07-0.14:2; 0.14-0.60:3;0.60-0.67:4; etc) - randomly generate a number x between 0 and 1

pick the sample according to the scheme (note that the same sample can be repeatly picked)

- construct an interval-selection scheme using the sum of new sample weight as cutoff value

- pick until number of samples reach the size of original set

- Use

- Repeat Step 1 to 7 until the entire forest is built

- Assign each sample with a weight (initially set to equal weight)

2. Pros and Cons

Pros

- Achieves higher performance than bagging when hyper-parameters tuned properly.

- Can be used for classification and regression equally well.

- Easily handles mixed data types.

- Can use "robust" loss functions that make the model resistant to outliers.

- AdaBoost is easy to implement.

- We can use many base classifiers with AdaBoost.

- AdaBoost is not prone to overfitting.

Cons

- Difficult and time-consuming to properly tune hyper-parameters.

- Cannot be parallelized like bagging (bad scalability when vast amounts of data).

- More risk of overfitting compared to bagging.

- AdaBoost is sensitive to noise data.

- It is highly affected by outliers because it tries to fit each point perfectly.

- Slower as compared to XGBoost

3. Comparison with Random Forest

- Random Forest VS AdaBoost (Bagging vs Boosting)

- Random Forest uses full grown trees while Adaboost uses stumps (one root node with two leafs)

- In a Random Forest all the trees have similar amount of say, while in Adaboost some trees have more say than the other.

- In a random forest the order of the tree does not matter, while in Adaboost the order is important (especially since each tree is built by taking the error of the previous error).

4. Sample Code

1 | import pandas as pd |

GBM (Gradient Boosting)

Gradient Boosting trains many models in a gradual, additive and sequential manner (sequential + homogeneous).

Major Motivation: allows one to optimise a user specified cost function, instead of a loss function that usually offers less control and does not essentially correspond with real world applications.

Main logic: utilizes the gradient descent to pinpoint the challenges in the learners' predictions used previously. The previous error is highlighted, and, by combining one weak learner to the next learner, the error is reduced significantly over time.

Procedure:

For Regression

- Start by Compute the average of the

, this is our 'initial prediction' for every sample - Then compute the

; : Pseudo Residual at i-th sample : True value of i-th sample : Estimated value of i-th sample (here in first iteration)

- Construct a new decision tree (fixed size) with the goal of predicting the residuals (a DT of

, not the true value!!!) - If in a leaf, # of leaves < # of samples, then put the

of samples that fall into same category into the same leaf; Then take average of all values on that leaf as output values; - Compute the new predicted value (

) : Newly Estimated value of i-th sample : learning rate, usually between 0 ~ 0.1 : Estimated pseudo residual values (deduced from the decision tree)

- Compute the new

of each sample = - Construct the new tree with the new pseudo residual

in step 5: - Repeat step 2, 3

- Compute the new predicted value

(here is deduced from a new DT): - Compute the new pseudo residual of each sample

- Loop through the process UNTIL: adding additional trees does not significantly reduce the size of the pseudo residuals

For Classification

- Set the initial prediction

for every sample using ( is th probability of a sample being classified as 1) : (same for every sample) : log(odds) prediction for i-th sample, initially the same value, but value for each sample will change upon future iterations - Using logistic function for classification:

;

- Decide on the classification: if

> threshold, then "Yes"; else "No"; here the threshold may not be 0.5 (AUC and ROC to decide on the value); - Compute

- Build a DT using the pseudo residual

- Transformation of the pseudo residual to obtain the output values on each leaf:

- e.g: if a leaf has (0.3, -0.7),

then the leaf output value

- Compute the new prediction

: log(odds) prediction for i-th sample in new iteration : learning rate, usually between 0 ~ 0.1

- Compute the new Probability

- Compute the new predicted value for each sample;

- Compute the new pseudo residual for each sample;

- Build the new tree;

- Loop until the pseudo residual does not change significantly;

- Start by Compute the average of the

Early Stopping: Early Stopping performs model optimisation by monitoring the model’s performance on a separate test data set and stopping the training procedure once the performance on the test data stops improving beyond a certain number of iterations.- It avoids overfitting by attempting to automatically select the inflection point where performance on the test dataset starts to decrease while performance on the training dataset continues to improve as the model starts to overfit. In the context of gbm, early stopping can be based either on an out of bag sample set ("OOB") or cross- validation ("cv").

1. Pros & Cons

Pros

- Robust against bias/outliers

- GBM can be used to solve almost all objective function that we can write gradient out, some of which RF cannot resolve

- Able to reduce bias and remove some extreme variances

Cons

- More sensitive to overfitting if the data is noisy

- GBDT training generally takes longer because of the fact that trees are built sequentially

- Prone to overfitting, but can be overcame by parameter optimization

2. AdaBoost vs GBM

- Both AdaBoost and Gradient Boosting build weak learners in a sequential fashion. Originally, AdaBoost was designed in such a way that at every step the sample distribution was adapted to put more weight on misclassified samples and less weight on correctly classified samples. The final prediction is a weighted average of all the weak learners, where more weight is placed on stronger learners.

- Later, it was discovered that AdaBoost can also be expressed as in terms of the more general framework of additive models with a particular loss function (the exponential loss).

- So, the main differences between AdaBoost and GBM are as follows:

- The main difference therefore is that Gradient Boosting is a generic algorithm to find approximate solutions to the additive modeling problem, while AdaBoost can be seen as a special case with a particular loss function (Exponential loss function). Hence, gradient boosting is much more flexible.

- AdaBoost can be interepted from a much more intuitive perspective and can be implemented without the reference to gradients by reweighting the training samples based on classifications from previous learners.

- In Adaboost, shortcomings are identified by high-weight data points while in Gradient Boosting, shortcomings of existing weak learners are identified by gradients.

- Adaboost is more about 'voting weights' and Gradient boosting is more about 'adding gradient optimization'.

- Adaboost increases the accuracy by giving more weightage to the target which is misclassified by the model. At each iteration, Adaptive boosting algorithm changes the sample distribution by modifying the weights attached to each of the instances. It increases the weights of the wrongly predicted instances and decreases the ones of the correctly predicted instances.

- AdaBoost use simple stumps as learners, while the fixed size trees of GBM are usually of maximum leaf number between 8 and 32;

- Adaboost corrects its previous errors by tuning the weights for every incorrect observation in every iteration, but gradient boosting aims at fitting a new predictor in the residual errors committed by the preceding predictor.

3. Random Forest vs GBM

- GBMs are harder to tune than RF. There are typically three parameters: number of trees, depth of trees and learning rate, and each tree built is generally shallow.

- RF is harder to overfit than GBM.

- RF runs in parallel while GBM runs in sequence

4. Application

- A great application of GBM is anomaly detection in supervised learning settings where data is often highly unbalanced such as DNA sequences, credit card transactions or cybersecurity.

5. Sample Code implementation

1 | import numpy as np |

XGBoost

An optimized GBM

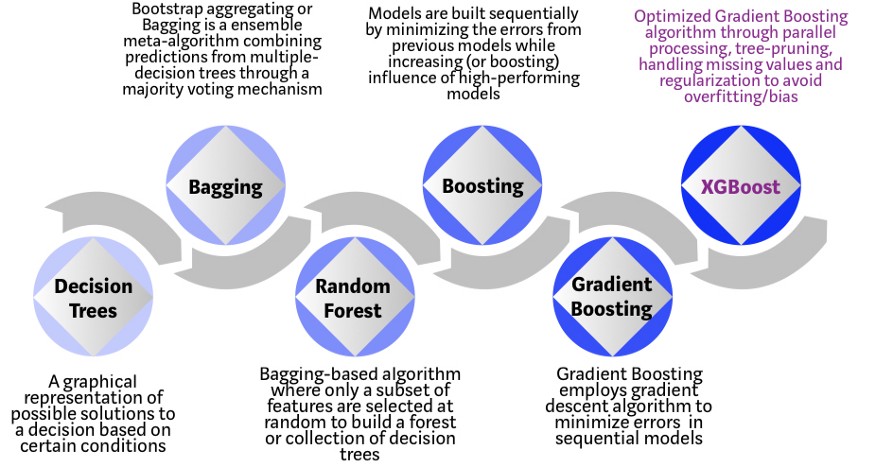

Evolution of XGBoost from Decision Tree

Procedure:

For Regression

- Set initial value

(by default is 0.5 [for both regression and classification]) - Build the first XGBoost Tree (a unique type of regression tree):

- Start with a root containing all the residuals

; - Compute similarity score

where is the Regularization parameter. - Make a decision on spliting condition: For each consecutive samples, compute the mean k of 2 input as the threshold for decision node; then split by the condition feature_value < k

- Decide the best thresold for spliting: Adopt the threshold that gives the largest Gain

- For example: we have points {

}: - Step 1: set the first threshold be

- Step 2: now left node has {x1}, right node has {x2, x3}, we have

and - Step 3: Compute

- Step 4: Compute the second threshold

and new Gain using this thresold - Step 5: Since gain of threshold 1 is greater than that of threshold 2, we use

as the spliting threshold

- Step 1: set the first threshold be

- If the leaf after spliting has > 1 residual, consider whether to split again (based on the residuals in the leaf);

- continue until it reaches the max_depth (default is 6) or no more spliting is possible

- Notes on

- A larger

leads to greater likelihood of prunning as the are lower; - The

reduce the prediction’s sensitivity to nodes with low # of observations

- A larger

- Start with a root containing all the residuals

- Prune the Tree

- From bottom branch up, decide on whether to prune the node/branch

: The threshold to determine if a Gain is large enough to be kept - if

then prune (remove the branch); - Note that setting

does not turn off prunnig!!!

- If we prune every branch until it reaches the root, then remove the tree;

- From bottom branch up, decide on whether to prune the node/branch

- Compute

- Compute New prediction

: Learning rate, default value = 0.3 : Output value of in each residual tree

- Compute the new residuals

for all samples, build the next tree and prune the tree; - Repeat the process just like Gradient Boost does; As more trees are built, the Gains will decease; We stop until the Gain < terminating value

For Classification

- Set initial value

(by default is 0.5) - Build the first XGBoost Tree:

- Start with a root containing all the residuals

- Compute similarity score

where is the Regularization parameter. - Repeat the same procedure as the regression does; Compute all the Gains

- Warning of

Cover:- defined for the minimum number of residuals in each leaf (by default is 1)

- in Regression: = # of Residuals in the leaf (always >= 1)

- in Classification: =

(not necessarily >= 1), hence some leafs violating the Coverthreshold will be removed. HereCoverneeds to be carefully chosen (like 0, 0.1, etc)

- Start with a root containing all the residuals

- Prune the tree: same procedure as Regression case

- Compute

- Compute New prediction

: Learning rate, default value = 0.3 : Output value of in each residual tree (here is the first tree)

- Convert

into using logistic regression: - Compute the new residuals

for all samples, build the next tree and prune the tree; - Repeat the process just like Gradient Boost does; As more trees are built, the Gains will decease; We stop until the Gain < terminating value;

- Prediction:

;

- Set initial value

1. Advantage of XGBoost

- Parallelized Tree Building

- Unlike GBM, XGBoost is able to build the sequential tree using a parallelized implementation

- This is possible due to the interchangeable nature of loops used for building base learners: the outer loop that enumerates the leaf nodes of a tree, and the second inner loop that calculates the features. This nesting of loops limits parallelization because without completing the inner loop (more computationally demanding of the two), the outer loop cannot be started. Therefore, to improve run time, the order of loops is interchanged using initialization through a global scan of all instances and sorting using parallel threads. This switch improves algorithmic performance by offsetting any parallelization overheads in computation.

- Tree Pruning using depth-first approach

- The stopping criterion for tree splitting within GBM framework is greedy in nature and depends on the negative loss criterion at the point of split. XGBoost uses 'max_depth' parameter as specified instead of criterion first, and starts pruning trees backward. (This 'depth-first' approach improves computational performance significantly.)

- Cache awareness and out-of-core computing

- allocating internal buffers in each thread to store gradient statistics. Further enhancements such as 'out-of-core' computing optimize available disk space while handling big data-frames that do not fit into memory.

- Regularization

- It penalizes more complex models through both LASSO (L1) and Ridge (L2) regularization to prevent overfitting.

- Efficient Handling of missing data

- XGboost decides at training time whether missing values go into the right or left node. It chooses which to minimise loss. If there are no missing values at training time, it defaults to sending any new missings to the right node.

- In-built cross-validation capability

- The algorithm comes with built-in cross-validation method at each iteration, taking away the need to explicitly program this search and to specify the exact number of boosting iterations required in a single run.

LightGBM

- A follow-up (and competitor) from XGBoost

- Generally the same as GBM, except that a lot of optimizations are done, see this page to view all of them

- Light GBM grows tree vertically while other algorithm grows trees horizontally meaning that Light GBM grows tree leaf-wise while other algorithm grows level-wise. It will choose the leaf with max delta loss to grow. When growing the same leaf, Leaf-wise algorithm can reduce more loss than a level-wise algorithm.

- It is not advisable to use LGBM on small datasets. Light GBM is sensitive to overfitting and can easily overfit small data.

1. Advantages of Light GBM

- Faster training speed and higher efficiency: Light GBM use histogram based algorithm i.e it buckets continuous feature values into discrete bins which fasten the training procedure.

- Lower memory usage: Replaces continuous values to discrete bins which result in lower memory usage.

- Better accuracy than any other boosting algorithm: It produces much more complex trees by following leaf wise split approach rather than a level-wise approach which is the main factor in achieving higher accuracy. However, it can sometimes lead to overfitting which can be avoided by setting the max_depth parameter.

- Compatibility with Large Datasets: It is capable of performing equally good with large datasets with a significant reduction in training time as compared to XGBOOST.

- Parallel learning supported

2. Code sample: XGBoost vs LightGBM

1 | #importing standard libraries |

3. General Pros and cons of boosting

Pros

- Achieves higher performance than bagging when hyper-parameters tuned properly.

- Can be used for classification and regression equally well.

- Easily handles mixed data types.

- Can use “robust” loss functions that make the model resistant to outliers.

Cons

- Difficult and time consuming to properly tune hyper-parameters.

- Cannot be parallelized like bagging (bad scalability when huge amounts of data).

- More risk of overfitting compared to bagging.

Conclusion

Here we end the discussion about ensemble models. It was a fun and challenging topic. While most users of these model won't need to understand every nitty-gritty of these models, these profound theories laid significant foundations for future research on supervised ensemble learning models (and even meta-learning). In the next month, I'll share some posts about unsupervised learning. This is even large a topic, and I expect the content to be even deeper. Good luck, me and everyone!

Ensemble Models: Boosting Techniques

https://criss-wang.github.io/post/blogs/supervised/ensemble-2/