Neural Network Applied: Optimizer Selection

Background

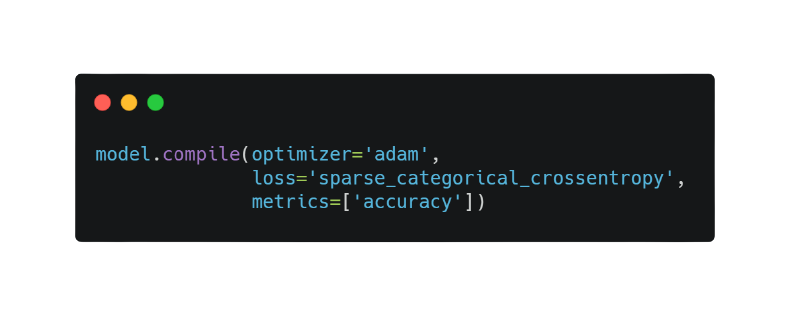

As one starts to use Neural Networks in their data models, he will inevitably encounter code of form like this:

One might be quickly puzzled by the 3 terms optimizer, adam and sparse_categorical_crossentropy here. The first 2 are part of this blog's focus, which is about the optimization strategy applied in a Neural Network execution, and the sparse_categorical_crossentropy is a loss function used to help with the optimization.

To understand the relevance of optimizer, one must first understand how an NN is trained. During the training of an NN, the weights of each neuron keeps getting updated so that the loss can be minimized. However, randomly updating the weights is not really feasible as there are hundreds of thousands of weights. Hence our smart scientists came up with a backward propagation (BP) algorithm for updating the weights. One may learn more about BP here. Behind BP we now require the optimizer to facilitate the updating of weights in each iteration.

Right below we discuss a few most commonly used optimizers:

Gradient Descent

Gradient Descent is the most basic optimization strategy which is based on the first order derivative of a loss function. The first order derivative serves as a guide on the direction to modify the weight so as to minimize the loss function. We've discussed its variant in details in an earlier post. To refresh our memory and make this blog more coherent, let's quickly recap here.

Analytic form:

Characteristics of Gradient Descent include:

- It's used heavily in linear regression and classification algorithms.

- Easy computation and implementatoin (

Pros) - May trap at local minima (

Cons) - Weights are changed only after calculating gradient on the whole dataset. So, if the dataset is too large then the convergence may take very long time (

Cons) - Requires large memory to calculate gradient on the whole dataset (

Cons)

Stochastic Gradient Descent

Gradient Descent has the problem of calculate gradient on the whole dataset in each itearation for weight update. Here Stochastic Gradient Descent aims to resolve this issue by processing data in random batches.

As the model parameters are frequently updated parameters have high variance and fluctuations in loss functions at different intensities.

Analytic form:

Characteristics of SGD include:

- The learning rate needs to be updated in each iteartion to aviod over-fitting

- Faster convergence rate and less memory used (

Pros) - High variance in model parameters. (

Cons) - May continue to run even when global minima is achieved. (

Cons) - To reduce the variance we further have the

mini-batch Gradient Descentwhich divides the data into mutiple batches and updates the model parameters after every batch (vs 1 data entry per update in SGD).

In general, Gradient Descent method has the challenge of

- Choosing an optimum value of the learning rate. If the learning rate is too small than gradient descent may take ages to converge.

- Have a constant learning rate for all the parameters. There may be some parameters which we may not want to change at the same rate.

- May get trapped at local minima.

Momentum

Momentum was invented for reducing high variance in SGD and softens the convergence. It takes advantage of information from previous directions via a formula

Analytic form:

Characteristics of Momentum include:

- The momentum term

is usually set to 0.9 or a similar value. - Faster Convergence and smaller variance (

pros) - Less Oscilliation & more smooth shifting of direction (

pros)

Adagrad

Often, the learning rate Adagrad aims to achieve such effect. If we use low learning rates for parameters associated with most frequently occurring features, and high learning rates for parameters associated with infrequent features. We can get a good model.

Analytic form:

where

Here

Characteristics of Adagrad include:

- Learning rate changes for each training parameter.

- Don't need to manually tune the learning rate. (

pros) - Able to train and performs well on sparse data. (

pros) - Computationally expensive as a need to calculate the second order derivative. (

cons) - Learning rate is monotone decreasing as iteration

increases. ( cons)

AdaDelta

It is an extension of AdaGrad which tends to remove the decaying learning Rate problem of it. Instead of accumulating all previously squared gradients, Adadelta limits the window of accumulated past gradients to some fixed size

By the idea above, we reducing the window size of

Analytic form:

Characteristics of AdaDelta include:

- Learning rate does not decay necessarily (

pros) - More computationally expensive as expectation is involved (

cons)

RMSProp

The RMSProp algorithm full form is called Root Mean Square Prop, which is an adaptive learning rate optimization algorithm proposed by Geoffery Hinton.

RMSProp is another strategy that tries to resolve Adagrad's radically diminishing learning rates problem by using a moving average of the squared gradient. It utilizes the magnitude of the recent gradient descents to normalize the gradient.

While Adagrad accumulates all previous gradient squares, RMSprop just calculates the corresponding average value, so it can eliminate the problem of quickly dropping of learning rate of the Adagrad.

By the idea above, we replace the

Analytic form:

Conclusion for the dynamic learning rate optimizer:

- Good for sparse data

- Be careful of the diminishing speed of learning rate

- More expensive computationally in general

Adam

Adam (Adaptive Moment Estimation) works with momentums of first and second order. The intuition behind the Adam is that we don't want to roll so fast just because we can jump over the minimum, we want to decrease the velocity a little bit for a careful search. In addition to storing an exponentially decaying average of past squared gradients like AdaGrad, Adam also keeps an exponentially decaying average of past gradients M(t). In summary, Adam can be looked at as a combination of RMSprop and Stochastic Gradient Descent with momentum.

Note that although the name momentum looks fancy, the terms we need to consider are just first and second order momentum, which are essentially mean and variance of the gradients. Afterwards, we can consider 2 terms

Hence the formula is as follows:

These 2 terms are used to approximate the first and second moments, that is:

Although we have

After bias correction, we derive the terms

Here

Finally the update formula is just

Neural Network Applied: Optimizer Selection

https://criss-wang.github.io/post/blogs/mlops/nn-optimizers/