Gradient Descent Algorithm and Its Variants!

Overview of Gradient Descent

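Optimization refers to the task of minimizing or maximizing an objective function. In machine learning and deep learning, this is usually the task of minimizing a cost (loss) function of the model’s parameters, and an optimization algorithm has one of the following goals: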

- Find the global minimum of the objective function. This is feasible if the objective function is convex, i.e. any local minimum is a global minimum.

- Find the lowest possible value of the objective function within its neighborhood. This is usually the goal when the objective function is not convex, as is the case in most deep learning problems.

There are three kinds of optimization algorithms:

- Non-iterative optimization algorithms that simply solve for a single point.

- Iterative optimization algorithms that converge to an acceptable solution regardless of parameter initialization, such as gradient descent applied to logistic regression.

- Iterative optimization algorithms applied to problems with non-convex cost functions, such as neural networks. Here, parameter initialization plays a critical role in speeding up convergence and achieving lower error rates.

Gradient descent is the most common optimization algorithm in machine learning and deep learning. It is a first-order optimization algorithm, which means it only takes the first derivative into account when updating the parameters. On each iteration, we update the parameters in the opposite direction of the gradient of the objective function with respect to the parameters, since the gradient points in the direction of steepest ascent.
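A minimal sketch of that update rule follows, assuming a toy convex objective $f(w) = \lVert w - c \rVert^2$ chosen only for illustration; the names `target`, `f`, and `grad_f` are hypothetical. Each iteration steps against the gradient, scaled by a learning rate.

```python
import numpy as np

# Toy convex objective (assumption for illustration): f(w) = ||w - target||^2.
# Its gradient is 2 * (w - target) and its minimum is at w = target.
target = np.array([3.0, -1.0])

def f(w):
    return float(np.sum((w - target) ** 2))

def grad_f(w):
    return 2.0 * (w - target)

w = np.zeros(2)        # initial parameters
learning_rate = 0.1    # step size

for _ in range(100):
    # Move opposite to the gradient, i.e. in the direction of steepest descent.
    w = w - learning_rate * grad_f(w)

print(w, f(w))
```

With this learning rate, the distance to `target` shrinks by a constant factor (0.8) per step, so `w` ends up very close to the minimum.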

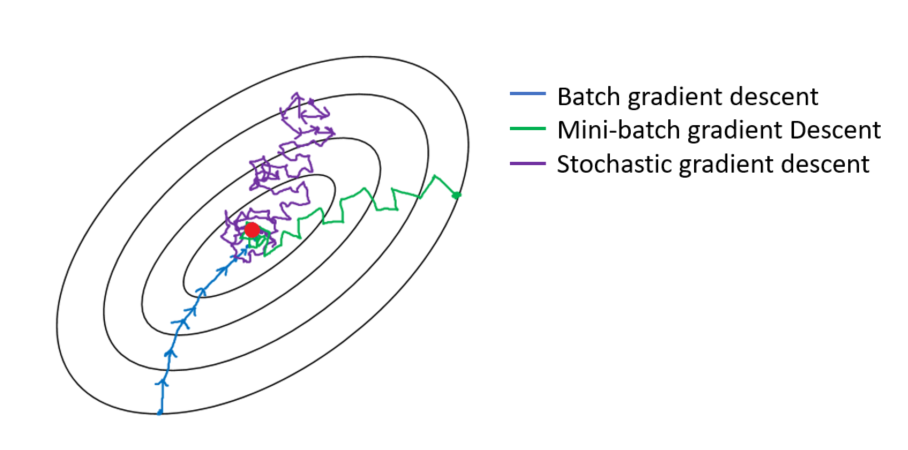

In this notebook, we’ll cover the gradient descent algorithm and its variants: Batch Gradient Descent, Mini-batch Gradient Descent, and Stochastic Gradient Descent.

Let’s first see how gradient descent and its associated steps work on logistic regression before going into the details of its variants. For the sake of simplicity, let’s assume that the logistic regression model has only two parameters: a weight $w$ and a bias $b$.

Initialize weight

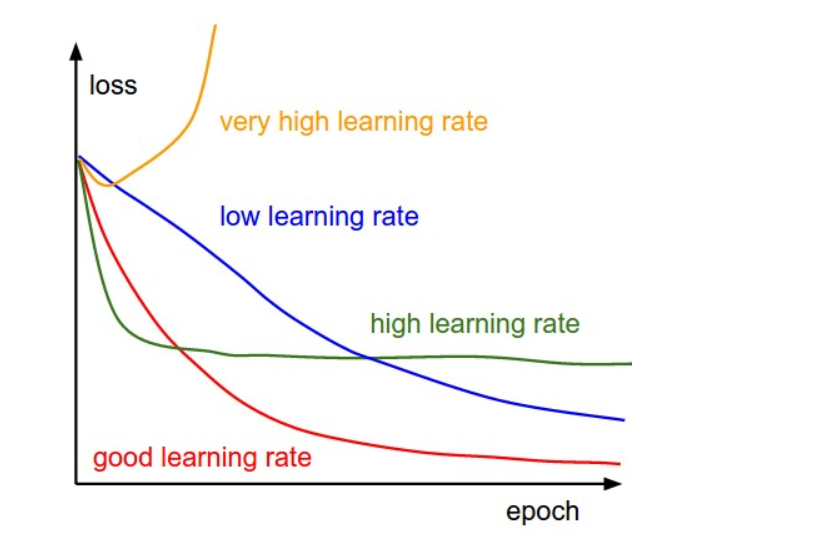

and bias to any random numbers. Pick a value for the learning rate

. The learning rate determines how big the step would be on each iteration. If

is very small, it would take long time to converge and become computationally expensive. IF

is large, it may fail to converge and overshoot the minimum. Therefore, plot the cost function against different values of

and pick the value of that is right before the first value that didn’t converge so that we would have a very fast learning algorithm that converges (Figure 1).

Figure 2 The most commonly used rates are : 0.001, 0.003, 0.01, 0.03, 0.1, 0.3.
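To see the effect of the learning rate numerically, here is a small sketch on a toy cost $J(w) = w^2$ (an assumption for illustration, with gradient $2w$). `run_gd` is a hypothetical helper that returns the cost history you would plot; the value 1.1 is added deliberately, outside the common list, to show divergence.

```python
def run_gd(learning_rate, num_iters=50, w0=10.0):
    """Minimize the toy cost J(w) = w**2 (gradient 2*w); return the cost after each step."""
    w = w0
    history = []
    for _ in range(num_iters):
        w = w - learning_rate * 2 * w
        history.append(w ** 2)
    return history

for lr in [0.001, 0.01, 0.1, 0.3, 1.1]:
    print(f"lr={lr}: final cost = {run_gd(lr)[-1]:.3g}")
```

For this toy cost each step multiplies $w$ by $(1 - 2\alpha)$: a tiny $\alpha$ barely moves, a moderate one converges quickly, and any $\alpha > 1$ makes the iterates grow without bound.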

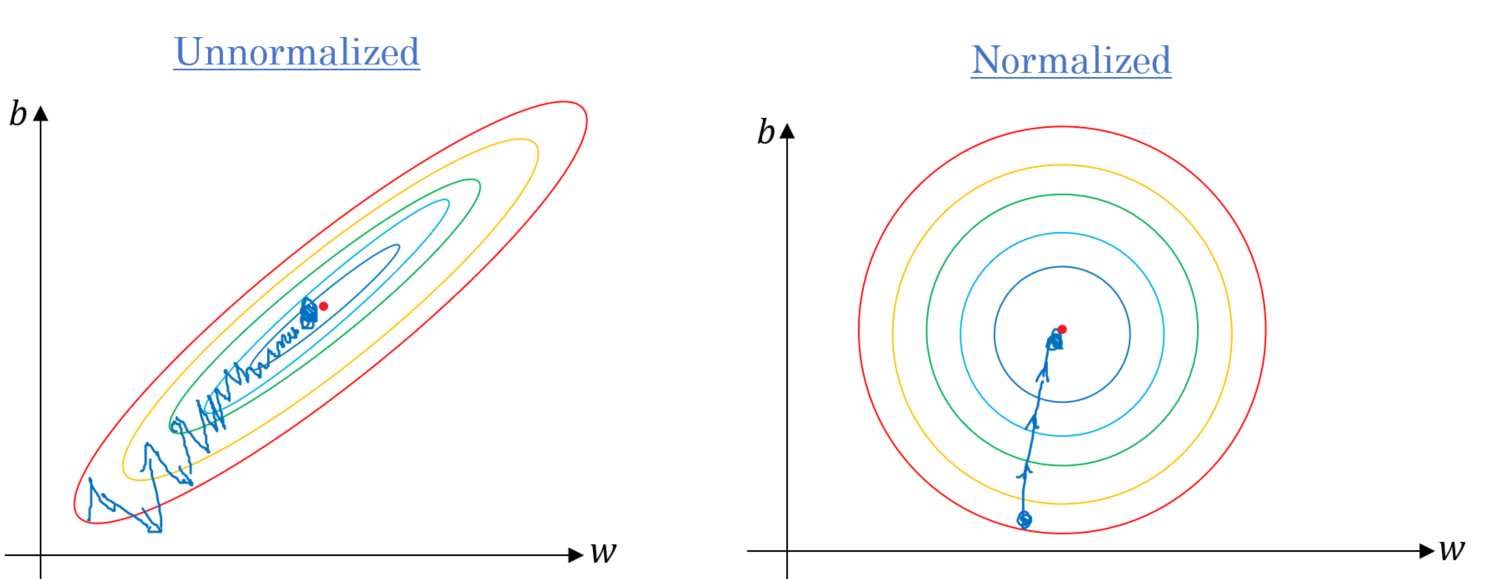

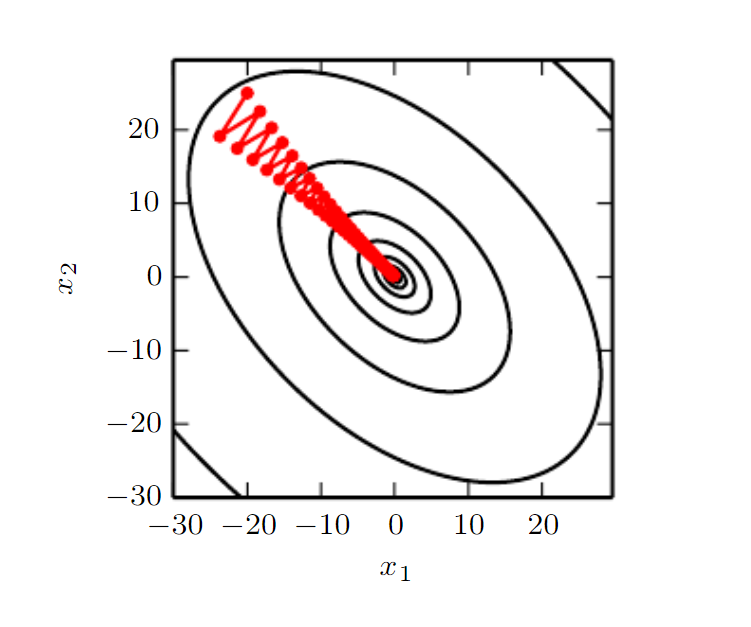

Make sure to scale the data if the features are on very different scales. Without scaling, the level curves (contours) are narrower and taller, which means gradient descent takes longer to converge (Figure 2).

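Scale the data to have mean $\mu = 0$ and standard deviation $\sigma = 1$. Below is the formula for scaling each example:

$$x_i := \frac{x_i - \mu}{\sigma}$$

where $\mu$ and $\sigma$ are computed per feature.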
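A short NumPy sketch of this standardization, applied column by column; the data values are made up for illustration and `standardize` is a hypothetical helper name.

```python
import numpy as np

def standardize(X):
    """Scale each feature (column) of X to zero mean and unit standard deviation."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    return (X - mu) / sigma, mu, sigma

# Two features on very different scales (made-up values for illustration).
X = np.array([[1.0, 2000.0],
              [2.0, 3000.0],
              [3.0, 2500.0],
              [4.0, 1500.0]])
X_scaled, mu, sigma = standardize(X)
```

Returning `mu` and `sigma` lets you scale validation or test data with the training statistics. A constant feature has `sigma == 0` and would need special handling.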

On each iteration, take the partial derivative of the cost function with respect to each parameter (the gradient).

The update equations are:

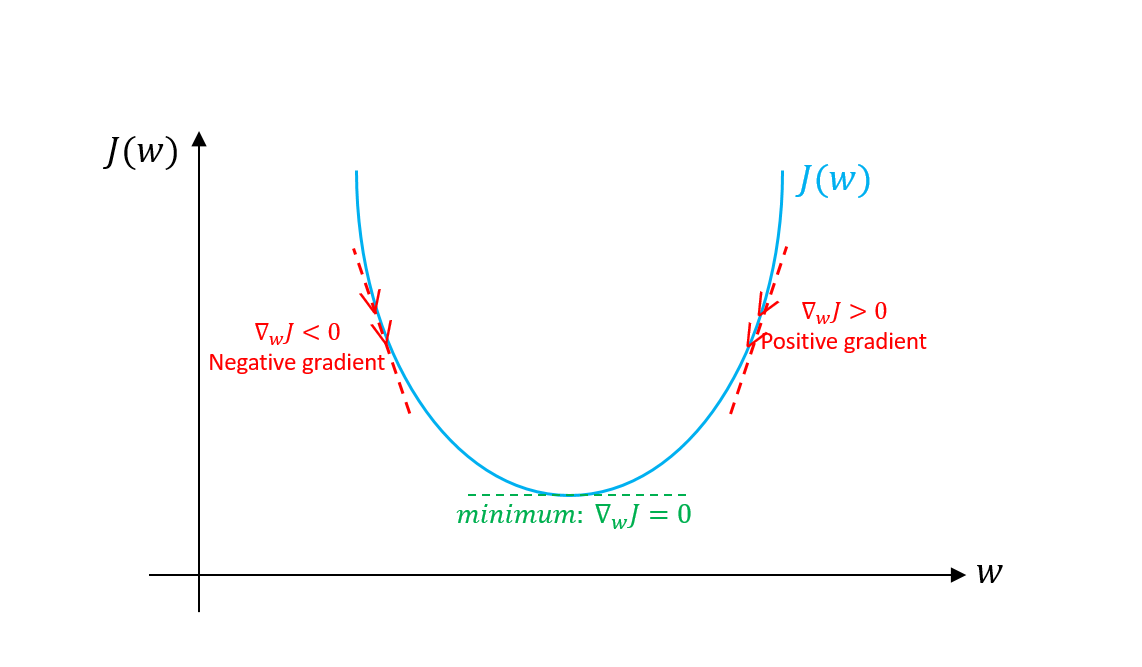
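As a sketch of this step, assuming the cost is the average binary cross-entropy (the usual choice for logistic regression, stated here as an assumption), the partial derivatives are $\frac{1}{m} X^\top (\sigma(Xw + b) - y)$ for $w$ and the mean of $\sigma(Xw + b) - y$ for $b$. The code checks them against central finite differences and then applies one update; all names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(w, b, X, y):
    """Average binary cross-entropy of logistic regression (assumed cost function)."""
    a = sigmoid(X @ w + b)
    return -np.mean(y * np.log(a) + (1 - y) * np.log(1 - a))

def gradients(w, b, X, y):
    """Partial derivatives of the cost with respect to w and b."""
    m = X.shape[0]
    error = sigmoid(X @ w + b) - y
    dw = X.T @ error / m
    db = np.mean(error)
    return dw, db

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 2))
y = (rng.random(20) > 0.5).astype(float)
w, b = rng.normal(size=2), 0.1

# Compare the analytic gradient with central finite differences.
dw, db = gradients(w, b, X, y)
eps = 1e-6
e0 = np.array([eps, 0.0])
numeric_dw0 = (cost(w + e0, b, X, y) - cost(w - e0, b, X, y)) / (2 * eps)
numeric_db = (cost(w, b + eps, X, y) - cost(w, b - eps, X, y)) / (2 * eps)

# One gradient descent update with learning rate alpha.
alpha = 0.1
w_new, b_new = w - alpha * dw, b - alpha * db
```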

- For the sake of illustration, assume we don’t have a bias. If the slope at the current value of $w$ is positive, we are to the right of the optimal value $w^*$, so the update is negative and moves $w$ toward $w^*$. If the slope is negative, the update is positive and increases $w$ toward $w^*$ (Figure 3).

- Continue the process until the cost function converges, that is, until the error curve becomes flat and stops changing.

- In addition, on each iteration, the step is taken in the direction of steepest descent, since the gradient is perpendicular to the level curves at each point.
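The no-bias case can be sketched in one dimension, assuming a toy cost $J(w) = (w - 2)^2$ with optimum $w^* = 2$. The hypothetical helper `gd_1d` starts on either side of $w^*$ and stops once the cost no longer changes by more than a tolerance, which is one simple way to detect that the error curve has flattened.

```python
def gd_1d(w, learning_rate=0.1, tol=1e-10, max_iters=10_000):
    """Minimize the toy cost J(w) = (w - 2)**2, whose optimum is w* = 2.

    Stops when the change in cost between iterations falls below tol,
    i.e. when the cost curve has flattened out.
    """
    cost = (w - 2) ** 2
    for i in range(max_iters):
        slope = 2 * (w - 2)            # dJ/dw: positive right of w*, negative left of it
        w = w - learning_rate * slope  # positive slope -> w decreases; negative -> w increases
        new_cost = (w - 2) ** 2
        if abs(cost - new_cost) < tol:
            return w, i + 1
        cost = new_cost
    return w, max_iters

w_right, n_right = gd_1d(5.0)   # start to the right of w*
w_left, n_left = gd_1d(-1.0)    # start to the left of w*
```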

Now let’s discuss the three variants of the gradient descent algorithm. The main difference between them is the amount of data we use when computing the gradients for each learning step. The trade-off between them is the accuracy of the gradient versus the time complexity of each parameter update (learning step).

Batch Gradient Descent

In Batch Gradient Descent, we sum over all examples on each iteration when updating the parameters. Therefore, every single update requires summing over all examples:

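```python
for i in range(num_epochs):
    params = params - learning_rate * compute_gradient(data, params)  # compute_gradient: hypothetical helper, gradient over ALL examples
```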

The main advantages:

- We can use a fixed learning rate during training without worrying about learning rate decay.

- It has a straight trajectory toward the minimum, and, in theory, with a suitable learning rate it is guaranteed to converge to the global minimum if the loss function is convex and to a local minimum if it is not.

- It gives an unbiased estimate of the gradient. The more examples, the lower the standard error.

The main disadvantages:

- Even with a vectorized implementation, going over all examples can still be slow, especially on large datasets.

- Each learning step happens only after going over all examples, even though some examples may be redundant and contribute little to the update.
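A runnable sketch of batch gradient descent for logistic regression, assuming the average cross-entropy cost and synthetic, linearly separable data generated only for illustration. The defining property is that each update averages the gradient over all $m$ examples, so there is exactly one update per epoch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def batch_gradient_descent(X, y, learning_rate=0.1, num_epochs=500):
    """Logistic regression trained with batch gradient descent.

    Every update uses the gradient averaged over ALL m training examples,
    so there is exactly one parameter update per epoch.
    """
    m, n = X.shape
    w, b = np.zeros(n), 0.0
    for _ in range(num_epochs):
        error = sigmoid(X @ w + b) - y      # shape (m,), vectorized over all examples
        w -= learning_rate * (X.T @ error) / m
        b -= learning_rate * np.mean(error)
    return w, b

# Synthetic, linearly separable data (illustrative only).
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

w, b = batch_gradient_descent(X, y)
accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == y)
```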

Mini-Batch Gradient Descent

Instead of going over all examples, Mini-batch Gradient Descent sums over a smaller number of examples determined by the batch size. Therefore, learning happens on each mini-batch:

- Shuffle the training dataset to avoid pre-existing order of examples.

- Partition the training dataset into mini-batches based on the batch size.

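```python
for i in range(num_epochs):
    for batch in get_batches(shuffle(data), batch_size):  # get_batches, shuffle: hypothetical helpers
        params = params - learning_rate * compute_gradient(batch, params)  # compute_gradient: hypothetical
```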

The batch size is something we can tune. It is usually chosen as a power of 2, such as 32, 64, 128, 256, or 512, because some hardware, such as GPUs, achieves better runtime with batch sizes that are powers of 2.
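The shuffle-then-partition steps can be sketched as follows for logistic regression, assuming the same average cross-entropy cost and synthetic data as before; `get_mini_batches` and `mini_batch_gradient_descent` are hypothetical names. When the number of examples is not divisible by the batch size, this version simply lets the last mini-batch be smaller.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def get_mini_batches(X, y, batch_size, rng):
    """Shuffle the examples, then partition them into consecutive mini-batches.

    If m is not divisible by batch_size, the last batch is smaller.
    """
    perm = rng.permutation(X.shape[0])
    X, y = X[perm], y[perm]
    return [(X[k:k + batch_size], y[k:k + batch_size])
            for k in range(0, X.shape[0], batch_size)]

def mini_batch_gradient_descent(X, y, batch_size=32, learning_rate=0.1,
                                num_epochs=20, seed=0):
    """Logistic regression trained with mini-batch gradient descent."""
    rng = np.random.default_rng(seed)
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(num_epochs):
        for X_batch, y_batch in get_mini_batches(X, y, batch_size, rng):
            error = sigmoid(X_batch @ w + b) - y_batch
            # Average over the mini-batch; len(y_batch) handles a short last batch.
            w -= learning_rate * (X_batch.T @ error) / len(y_batch)
            b -= learning_rate * np.mean(error)
    return w, b

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)
w, b = mini_batch_gradient_descent(X, y, batch_size=32)
accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == y)
```

With 200 examples and a batch size of 32, each epoch performs 7 updates (six batches of 32 and one of 8) instead of the single update per epoch of the batch version.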

The main advantages:

- Each update is faster than in the batch version, because it processes far fewer examples than batch gradient descent, which uses all of them.

- Randomly selecting examples helps avoid redundant or very similar examples that contribute little to learning.

- With a batch size smaller than the training set, it adds noise to the learning process, which helps reduce generalization error.

- Even though using more examples lowers the standard error of the gradient estimate, the return is less than linear relative to the extra computation, because the standard error shrinks only with the square root of the number of examples.
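The square-root relationship can be checked empirically. This sketch assumes a toy cost $J(w) = \frac{1}{2m}\sum_i (w - x_i)^2$, whose per-example gradient is $w - x_i$; with data of standard deviation 2, the error of a mini-batch gradient estimate should come out close to $2/\sqrt{\text{batch size}}$. The data and the helper `gradient_std_error` are hypothetical.

```python
import numpy as np

# Per-example gradients of a toy problem (assumption): for
# J(w) = mean((w - x_i)**2) / 2, the gradient from example i is (w - x_i).
rng = np.random.default_rng(0)
data = rng.normal(loc=5.0, scale=2.0, size=100_000)
w = 0.0
per_example_grads = w - data
true_grad = per_example_grads.mean()

def gradient_std_error(batch_size, num_trials=2_000):
    """Root-mean-square error of the mini-batch gradient estimate around the full gradient."""
    estimates = np.array([rng.choice(per_example_grads, size=batch_size).mean()
                          for _ in range(num_trials)])
    return float(np.sqrt(np.mean((estimates - true_grad) ** 2)))

for bs in [1, 4, 16, 64, 256]:
    print(bs, round(gradient_std_error(bs), 3))
```

Quadrupling the batch size (four times the computation per update) only roughly halves the error of the gradient estimate.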

The main disadvantages:

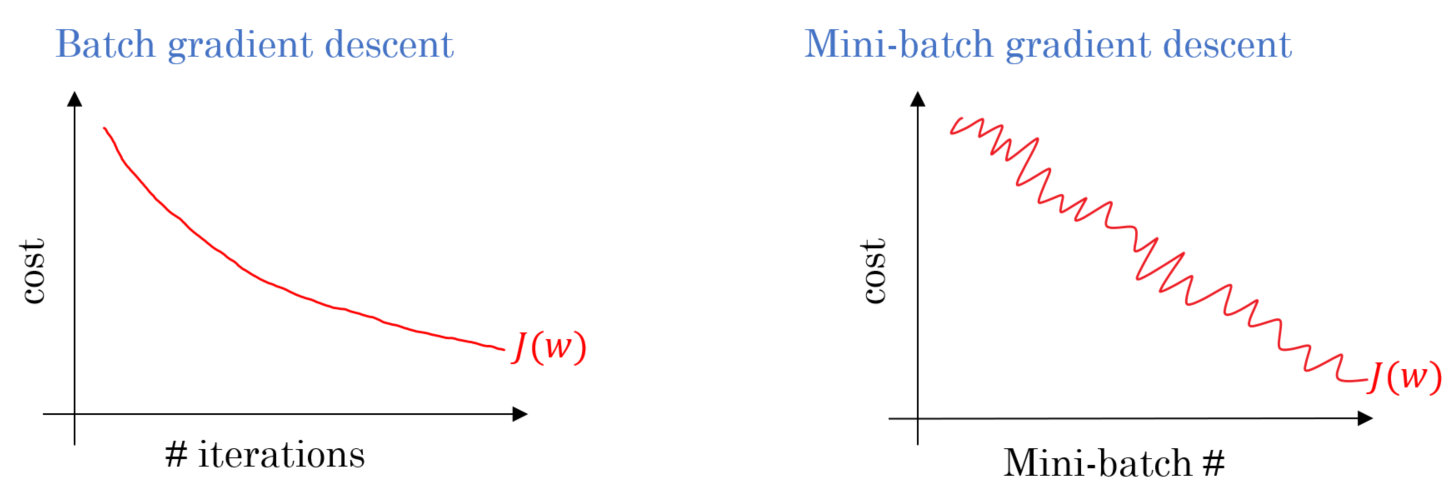

It won’t converge exactly with a fixed learning rate. On each iteration, the learning step may go back and forth due to the noise, so it wanders around the minimum region but never settles at the minimum.

Due to the noise, the learning steps oscillate more (see Figure 5), which requires learning rate decay to shrink the learning rate as we get closer to the minimum.

Figure 4
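A sketch of learning rate decay, assuming the inverse-time schedule $\alpha_t = \alpha_0 / (1 + \text{decay\_rate} \cdot t)$, which is one common choice among several. To make the noise obvious, the demo runs the extreme case of batch size 1 on a toy cost $J(w) = \frac{1}{2m}\sum_i (w - x_i)^2$; the data and helper names are hypothetical.

```python
import numpy as np

def decayed_learning_rate(initial_lr, decay_rate, step):
    """Inverse-time decay (assumed schedule): lr_t = lr_0 / (1 + decay_rate * step)."""
    return initial_lr / (1.0 + decay_rate * step)

rng = np.random.default_rng(0)
data = rng.normal(loc=5.0, scale=2.0, size=10_000)
optimum = data.mean()  # minimizer of J(w) = mean((w - x_i)**2) / 2

def sgd(decay_rate, initial_lr=0.5, num_steps=5_000, seed=1):
    """Noisy updates using one random example per step; returns the last 1000 iterates."""
    rng_local = np.random.default_rng(seed)
    w, trace = 0.0, []
    for t in range(num_steps):
        x = data[rng_local.integers(len(data))]
        lr = decayed_learning_rate(initial_lr, decay_rate, t)
        w -= lr * (w - x)          # gradient of (w - x)**2 / 2 is (w - x)
        trace.append(w)
    return np.array(trace[-1000:])

fixed = sgd(decay_rate=0.0)     # constant step size: keeps bouncing around the optimum
decayed = sgd(decay_rate=0.01)  # shrinking step size: settles much closer to the optimum
```

Comparing `np.std(fixed)` with `np.std(decayed)` shows the effect: with a constant step the iterates keep wandering around the minimum, while the decayed step lets them settle. Picking `decay_rate` is itself a tuning problem.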

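With large training datasets, we don’t usually need more than 2-10 passes over all training examples (epochs). Note: with a batch size equal to the number of training examples, mini-batch gradient descent becomes batch gradient descent.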

Stochastic Gradient Descent

Instead of going through all examples, Stochastic Gradient Descent (SGD) performs the parameter update on each example individually. Therefore, learning happens on every example:

- Shuffle the training dataset to avoid pre-existing order of examples.

- Partition the training dataset into individual examples, i.e., mini-batches of size 1.

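```python
for i in range(num_epochs):
    for example in shuffle(data):  # shuffle: hypothetical helper
        params = params - learning_rate * compute_gradient(example, params)  # compute_gradient: hypothetical
```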

It shares most of the advantages and the disadvantages with mini-batch version. Below are the ones that are specific to SGD:

- It adds even more noise to the learning process than mini-batch, which helps reduce generalization error. However, this increases the run time.

- We can’t use vectorization over a single example, so training becomes very slow. Also, the variance of the gradient estimate is large, since each learning step uses only one example.
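A sketch of SGD for logistic regression, assuming the same cross-entropy cost and synthetic data as in the earlier examples. It shuffles the indices each epoch and updates after every single example, so each gradient is a scalar error times one feature vector and nothing is vectorized across examples.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def stochastic_gradient_descent(X, y, learning_rate=0.1, num_epochs=5, seed=0):
    """Logistic regression trained with SGD: one parameter update per example."""
    rng = np.random.default_rng(seed)
    w, b = np.zeros(X.shape[1]), 0.0
    num_updates = 0
    for _ in range(num_epochs):
        for i in rng.permutation(X.shape[0]):     # shuffle each epoch
            error = sigmoid(X[i] @ w + b) - y[i]  # scalar: gradient from one example
            w -= learning_rate * error * X[i]
            b -= learning_rate * error
            num_updates += 1
    return w, b, num_updates

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)
w, b, num_updates = stochastic_gradient_descent(X, y)
accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == y)
```

Five epochs over 200 examples give 1,000 updates, compared with 5 for batch gradient descent over the same number of epochs, but each update is based on a single noisy example.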

Below is a graph that shows the gradient descent’s variants and their direction towards the minimum:

As the figure above shows, the SGD direction is much noisier than the mini-batch direction.

Areas for advancement

Below are some challenges facing the gradient descent algorithm in general, as well as its variants (mainly batch and mini-batch):

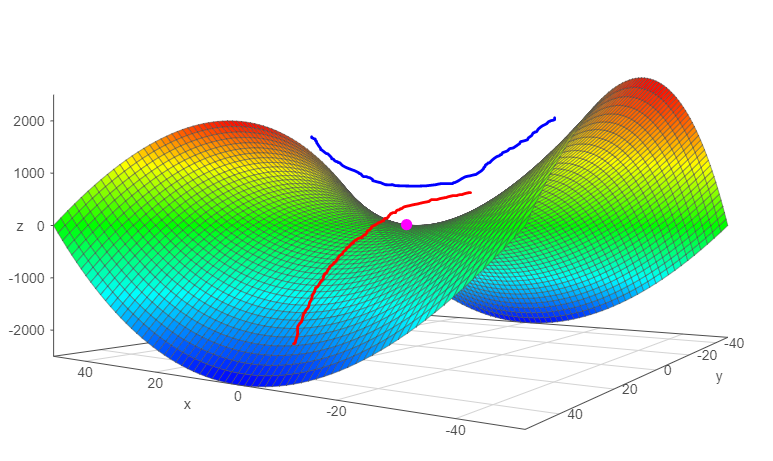

Gradient descent is a first-order optimization algorithm, which means it doesn’t take into account the second derivatives of the cost function. However, the curvature of the function affects the size of each learning step. The gradient measures the steepness of the curve but the second derivative measures the curvature of the curve. Therefore, if:

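- Second derivative = 0: the function has no curvature along the step, so the cost decreases by exactly the amount the gradient predicts.

- Second derivative > 0: the function curves upward, so the cost decreases less than the gradient predicts; with a large learning rate, the step can overshoot and the cost can even increase.

- Second derivative < 0: the function curves downward, so the cost decreases more than the gradient predicts.

As a result, a direction that looks promising to the gradient may not be, which can slow down the learning process or even cause it to diverge.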
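The three cases can be made precise with a second-order Taylor expansion of the cost around the current parameters $w$ for a gradient step of size $\alpha$. Here $g$ denotes the gradient and $H$ the Hessian (notation assumed for this sketch, not taken from the figures):

$$
J(w - \alpha g) \approx J(w) - \alpha\, g^\top g + \frac{1}{2}\alpha^2\, g^\top H g
$$

The term $-\alpha\, g^\top g$ is the decrease predicted by the gradient alone, and $\frac{1}{2}\alpha^2\, g^\top H g$ is the curvature correction. If $g^\top H g = 0$, the actual decrease matches the prediction. If $g^\top H g > 0$, the decrease is smaller than predicted, and the cost increases once $\alpha > 2\, g^\top g / g^\top H g$. If $g^\top H g < 0$, the decrease is larger than predicted.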


The Hessian matrix may have a poor condition number, i.e., the direction of most curvature has much more curvature than the direction of least curvature. This makes the cost function very sensitive in some directions and insensitive in others. As a result, gradient descent struggles, because the direction that looks promising to the gradient may not lead to big changes in the cost function.
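A sketch of how conditioning slows gradient descent, assuming a toy quadratic $J(w) = \frac{1}{2}\sum_i \lambda_i w_i^2$ whose Hessian is $\mathrm{diag}(\lambda)$. The learning rate must stay below $2/\lambda_{\max}$ to avoid divergence along the steepest direction; with $1/\lambda_{\max}$, progress along the flattest direction shrinks by a factor of only $1 - \lambda_{\min}/\lambda_{\max}$ per step. The helper name is hypothetical.

```python
import numpy as np

def iterations_to_converge(eigenvalues, tol=1e-6, max_iters=100_000):
    """Gradient descent on J(w) = 0.5 * sum(lam_i * w_i**2), whose Hessian is
    diag(eigenvalues); count the steps until ||w|| < tol.

    The learning rate is 1 / lambda_max: the step size is limited by the
    steepest direction, while the flattest direction then converges slowly.
    """
    lam = np.asarray(eigenvalues, dtype=float)
    learning_rate = 1.0 / lam.max()
    w = np.ones_like(lam)
    for k in range(max_iters):
        if np.linalg.norm(w) < tol:
            return k
        w = w - learning_rate * lam * w   # gradient of J is lam * w
    return max_iters

well_conditioned = iterations_to_converge([1.0, 2.0])     # condition number 2
ill_conditioned = iterations_to_converge([1.0, 100.0])    # condition number 100
```

Raising the condition number from 2 to 100 increases the iteration count by well over an order of magnitude on this toy problem.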

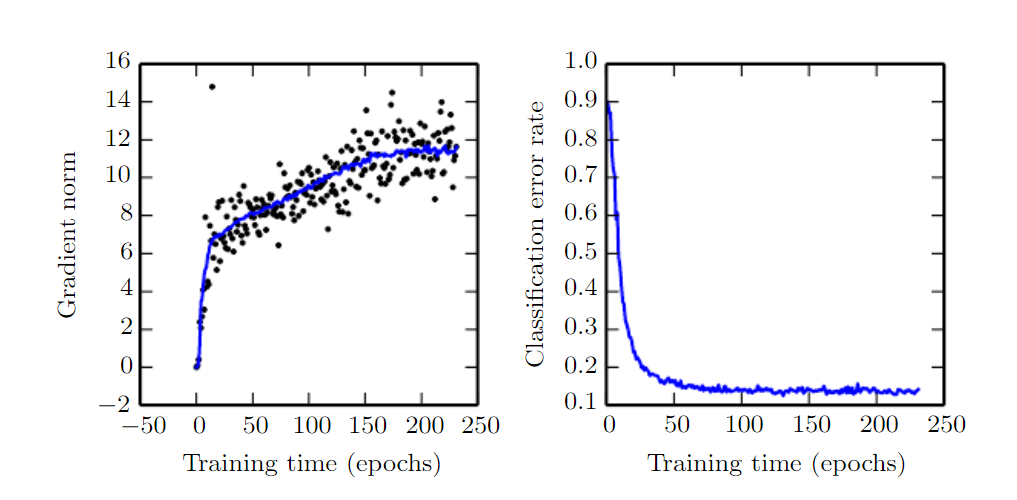

The norm of the gradient

In low dimensions, local minima are common; however, in high dimensions, saddle points are more common. A saddle point is a critical point where the function curves up in some directions and curves down in others. In other words, a saddle point looks like a minimum from one direction and a maximum from another (see Figure 9). This happens when the Hessian matrix at that point has both positive and negative eigenvalues.
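A sketch using the textbook saddle $f(x, y) = x^2 - y^2$ (chosen for illustration): its gradient vanishes at the origin, and its Hessian has one positive and one negative eigenvalue. Gradient descent started almost on the $x$-axis first heads toward the origin and then escapes along $y$, but only after the tiny $y$-component has had time to grow.

```python
import numpy as np

# f(x, y) = x**2 - y**2 has a critical point (zero gradient) at the origin.
def grad(p):
    x, y = p
    return np.array([2 * x, -2 * y])

hessian = np.array([[2.0, 0.0],
                    [0.0, -2.0]])           # constant Hessian of f
eigenvalues = np.linalg.eigvalsh(hessian)   # ascending order: one negative, one positive -> saddle

# Start almost exactly on the x-axis: x shrinks by 0.8 per step,
# while the tiny y-component grows by 1.2 per step and eventually escapes.
p = np.array([1.0, 1e-8])
for _ in range(100):
    p = p - 0.1 * grad(p)
```

During the first few dozen steps the point stays close to the origin, where the gradient is tiny, which is why saddle points can stall learning even though they are not minima.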

As discussed previously, choosing a proper learning rate is hard. Also, for mini-batch gradient descent, we have to adjust the learning rate during training to make sure it converges to the local minimum rather than wandering around it. Choosing the decay rate of the learning rate is also hard, and the right choice varies from dataset to dataset.

All parameters are updated with the same learning rate; however, we may want to perform larger updates for parameters whose directional derivatives are more in line with the trajectory toward the minimum than those of other parameters.


https://criss-wang.github.io/post/blogs/mlops/gradient-descent/